Rewards in Multi Agent Reinforcement learning

The reward: the ‘Supervised’ part of MARL

One of the virtues of (Multi Agent) Reinforcement Learning compared to Supervised Learning, another Artificial Intelligence paradigm, is that there is no ‘human tweaking’ involved with predefined correlations or the human labeling of data points.

Nevertheless, the reward structure within (Multi Agent) Reinforcement Learning is a subjective component with human intervention which can significantly alter or ‘tweak’ simulation outcomes. This can be mitigated by implementing the reward structure as simple or ‘sparse’ as possible. This however cannot fully eradicate biased human intervention.

The simulation results of the Predator, Prey, Grass Project give hopeful and reasonable results with sparse rewards. That is if agents only get an individual reward when reproducing, some desired emergent behavior (eating, fight/flight, ambushing) arise.

However, we feel that is is necessary to tweak the reward structure beyond the reproduction reward in order to obtain more realistic or ‘natural’ results. For instance, in the configuration with only rewards for reproducing, Prey tends to flee Predators but not as much as one would expect in the ‘real’ world. Therefore, we additionally introduce a (negative) reward for Prey who get caught by Predators. This leads to a more realistic fleeing behavior of Prey.

The Reward Structure as the ‘Nature’ component in MARL

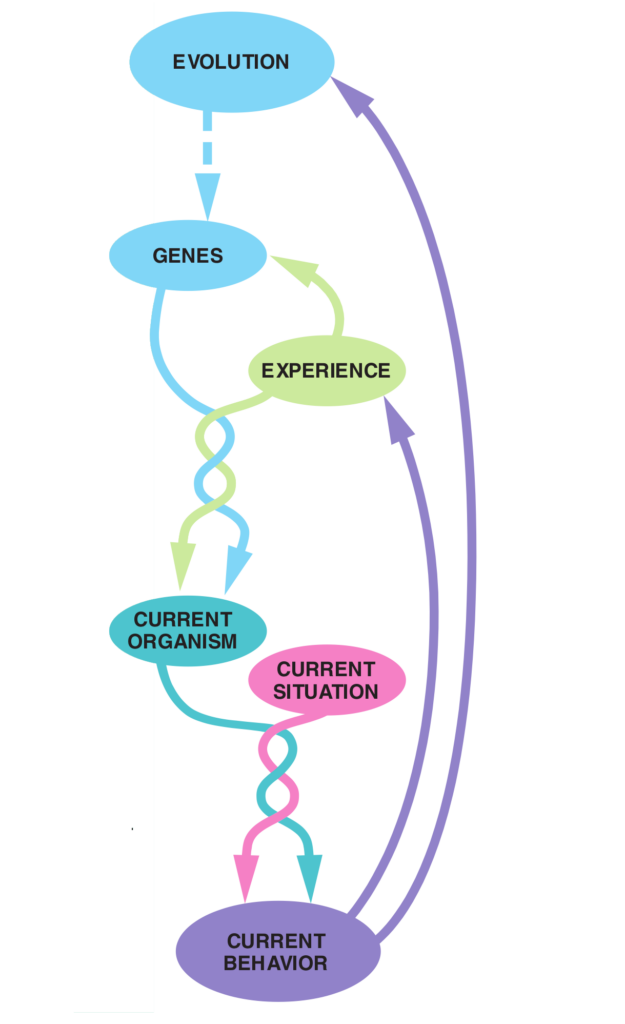

Since Predator and Prey start from scratch (‘Blank Slate’), all of their behavior has to be learned during training. In the real world humans genetically inherit traits form millions of years of adaptive/selective evolution. With our current understanding and algorithms we feel it is impossible that human behavior patterns would arise from scratch. Therefore, one has to impose a reward up front which structure kind of serves as the inherited or ‘Nature’ component in the model, whereas on the other hand the MARL simulation serves as the ‘Nurture’ component of human behavior.